Google and its video sharing app YouTube outlined plans for handling the 2022 U.S. midterm elections this week, highlighting tools at their disposal to limit the spread of political misinformation.

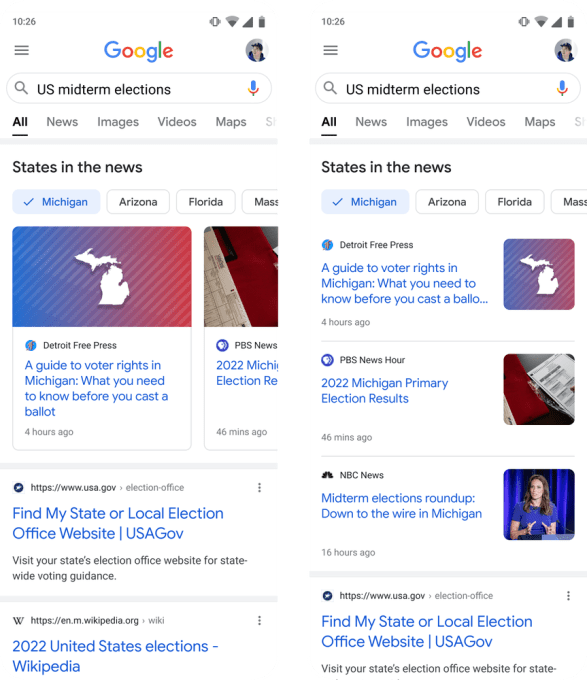

When users search for election content on either Google or YouTube, recommendation systems are in place to highlight journalism or video content from authoritative national and local news sources such as The Wall Street Journal, Univision, PBS NewsHour, and local ABC, CBS, and NBC affiliates.

In today’s blog post, YouTube noted that it has removed “a number of videos” about the U.S. midterms that violate its policies, including videos that make false claims about the 2020 election. YouTube’s rules also prohibit inaccurate videos on how to vote, videos inciting violence and any other content that it determines interferes with the democratic process. The platform adds that it has issued strikes to YouTube channels that violate policies related to the midterms and has temporarily suspended some channels from posting new videos.

Image Credits: Google

Google Search will now make it easier for users to look up election coverage by local and regional news from different states. The company is also rolling out a tool on Google Search that it has used before, which directs voters to accurate information about voter registration and how to vote. Google will be working with The Associated Press again this year to offer users authoritative election results in search.

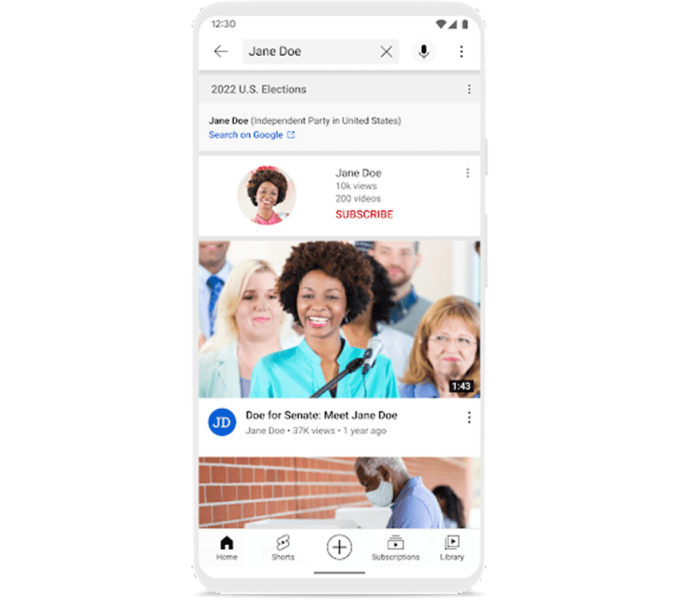

YouTube will also direct voters to an information panel on voting and a link to Google’s “how to vote” and “how to register to vote” features. Other election-related features YouTube announced today include reminders on voter registration and election resources, information panels beneath videos, recommended authoritative videos within its “watch next” panels, and an educational media literacy campaign with tips about misinformation tactics.

On Election Day, YouTube will share a link to Google’s election results tracker, highlight live streams of election night, and include election results below videos. The platform will also launch a tool in the coming weeks that gives people searching for federal candidates a panel that highlights essential information, such as which office they’re running for and what their political party is.

Image Credits: YouTube

With two months left until Election Day, Google’s announcement marks the latest attempt by a tech giant to prepare for a pivotal moment in U.S. history. Meta, TikTok, and Twitter have also recently addressed how they will approach the 2022 U.S. midterm elections.

YouTube faced scrutiny over how it handled the 2020 presidential election, waiting until December 2020 to announce a policy that would apply to misinformation swirling around the previous month’s election.

Before the policy was initiated, the platform didn’t remove videos with misleading election-related claims, allowing speculation and false information to flourish. That included a video from One America News Network (OAN) posted on the day after the 2020 election falsely claiming that Trump had won the election. The video was viewed more than 340,000 times, but YouTube didn’t immediately remove it, stating the video didn’t violate its rules.

In a new study, researchers from New York University found that YouTube’s recommendation system played a part in spreading misinformation about the 2020 presidential election. From October 29 to December 8, 2020, the researchers analyzed the YouTube usage of 361 people to determine whether the recommendation system steered users toward false claims in the election’s immediate aftermath. The researchers concluded that participants who were very skeptical about the election’s legitimacy were recommended significantly more election fraud-related claims than participants who were not skeptical about the results.

YouTube pushed back against the study in a conversation with TechCrunch, arguing that its small sample size undermined its potential conclusions. “While we welcome more research, this report doesn’t accurately represent how our systems work,” YouTube spokesperson Ivy Choi told TechCrunch. “We’ve found that the most viewed and recommended videos and channels related to elections are from authoritative sources, like news channels.”

The researchers acknowledged that the number of fraud-related videos in the study was low overall and that the data doesn’t consider what channels the participants were subscribed to. Nonetheless, YouTube is clearly a key vector of potential political misinformation — and one to watch as the U.S. heads into its midterm elections this fall.